While the first computers came about before its invention, the silicon microchip is the advancement that made the modern computer era possible. The ability to build a miniaturized circuit out of this semiconductor gave computers vast gains in speed and reliability, transforming them from room-sized devices into machines that could sit on a desk or your lap.

Early Circuit Design

Early computers used devices called vacuum tubes in their circuit designs. The tubes served as gates that switched currents on and off to direct the computer's function and store information. These were fragile components, however, and frequently failed during normal operation. The transistor, invented in 1947, went on to replace the vacuum tube in computer design. These small components required a semiconductor material to function. Early transistors contained germanium, but eventually silicon became the semiconductor of choice for computer architects.
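As a rough illustration of how simple on/off switches can direct a computation, the sketch below models each switch as a Boolean value. The function names (`series`, `parallel`, `inverter`, `half_adder`) are hypothetical, chosen only for this example, and the model ignores the electrical details of real vacuum tubes and transistors.

```python
def series(a: bool, b: bool) -> bool:
    """Two switches in series: current flows only if both are on (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel: current flows if either is on (OR)."""
    return a or b


def inverter(a: bool) -> bool:
    """A switch wired so that its output is the opposite of its input (NOT)."""
    return not a


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers using only the switch arrangements above.

    Returns (sum_bit, carry_bit).
    """
    carry_bit = series(a, b)
    # Sum is on when exactly one input is on: (a OR b) AND NOT (a AND b).
    sum_bit = series(parallel(a, b), inverter(carry_bit))
    return sum_bit, carry_bit


if __name__ == "__main__":
    for a in (False, True):
        for b in (False, True):
            print(a, b, half_adder(a, b))
```

Chaining many such arrangements together is, in very simplified terms, how a machine built from switches can carry out arithmetic.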

Silicon's Advantages

As a semiconductor, silicon has electrical properties that lie between those of conductors and insulators. Manufacturers can chemically alter the base silicon, a process known as doping, to change how readily it conducts electricity depending on the specific needs of the unit. This allowed computer designers to create many of their components out of the same material, instead of requiring separate wires and other materials to achieve the same results.
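The effect of chemically altering silicon (doping) on its conductivity can be estimated with the textbook relation σ = q(n·μn + p·μp), where n and p are the electron and hole concentrations. The sketch below is a minimal illustration, not a device model. The constants are approximate room-temperature values for lightly doped silicon, the function name `conductivity` and its parameters are hypothetical, and the calculation assumes every dopant atom contributes one charge carrier.

```python
import math

# Approximate room-temperature values for silicon (assumptions for illustration).
Q = 1.602e-19   # elementary charge, coulombs
MU_N = 1350.0   # electron mobility, cm^2/(V*s), lightly doped
MU_P = 480.0    # hole mobility, cm^2/(V*s), lightly doped
N_I = 1.0e10    # intrinsic carrier concentration, per cm^3


def conductivity(donors_per_cm3: float = 0.0, acceptors_per_cm3: float = 0.0) -> float:
    """Estimate conductivity in S/cm for a given doping level."""
    net = donors_per_cm3 - acceptors_per_cm3
    half = abs(net) / 2.0
    # Charge neutrality plus the mass-action law (n * p = n_i^2)
    # give the majority and minority carrier concentrations.
    majority = half + math.sqrt(half * half + N_I * N_I)
    minority = N_I * N_I / majority
    if net >= 0:
        n, p = majority, minority   # n-type (or undoped)
    else:
        n, p = minority, majority   # p-type
    return Q * (n * MU_N + p * MU_P)


if __name__ == "__main__":
    print("undoped:", conductivity())
    print("n-type :", conductivity(donors_per_cm3=1e16))
    print("p-type :", conductivity(acceptors_per_cm3=1e16))
```

Under these assumptions, adding roughly one dopant atom per few million silicon atoms raises the conductivity by several orders of magnitude, which is why the same base material can serve very different roles within one chip.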

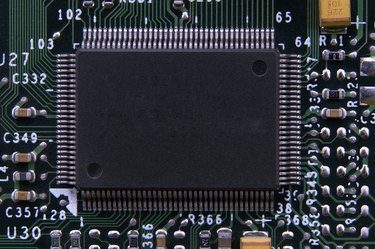

The Microchip

Unfortunately, the complex circuitry required for powerful computers still meant the devices had to be extremely large. In 1958, however, Jack Kilby came up with the idea of building a computer's circuitry in miniature on a single block of semiconductor, with the circuit printed on top in metal, instead of assembling it from separate wires and components. About six months later, Robert Noyce came up with the idea of laying the metal over the semiconductor and then etching away the unnecessary parts to create the integrated circuit. These advancements greatly reduced the size of computer circuits and made it possible to mass-produce them for the first time.

Silicon Chip Fabrication

Today, silicon chip fabricators use high-powered ultraviolet light to pattern their chips. After a photosensitive film is placed on a silicon wafer, the light shines through a circuit mask and imprints the image of the circuit design on the film. The manufacturer etches away the unprotected areas, then lays down another layer of material and repeats the process. Finally, one last layer of film defines the metal circuitry that caps off the chip, completing the electrical circuit. Modern silicon chips can contain many layers with different electrical properties to suit the electrical needs of the computer design.
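The mask-and-etch cycle described above can be sketched as a toy model on a small grid. This is only an illustration under simplifying assumptions: the film behaves like a positive photoresist (areas hit by light wash away), each layer is a flat grid of cells, and the names `pattern_layer` and `build_chip` are hypothetical.

```python
OPAQUE = "#"    # mask cell that blocks the ultraviolet light
CLEAR = "."     # mask cell that lets the light through
MATERIAL = "#"  # layer cell where material survives the etch
EMPTY = " "     # layer cell where material was etched away


def pattern_layer(mask: list[str]) -> list[str]:
    """Pattern one layer of material through one mask.

    Steps modelled per cell:
      1. The whole layer is coated with photosensitive film.
      2. Light passes through CLEAR mask cells and exposes the film there.
      3. Exposed film washes away (positive-resist assumption).
      4. Material no longer protected by film is etched away.
    """
    layer = []
    for mask_row in mask:
        row = ""
        for cell in mask_row:
            exposed = cell == CLEAR
            film_remains = not exposed
            row += MATERIAL if film_remains else EMPTY
        layer.append(row)
    return layer


def build_chip(masks: list[list[str]]) -> list[list[str]]:
    """Repeat the cycle once per mask, bottom layer first."""
    return [pattern_layer(mask) for mask in masks]


if __name__ == "__main__":
    masks = [
        ["####", "#..#", "####"],  # lower layer
        ["#..#", "#..#", "#..#"],  # upper layer
    ]
    for number, layer in enumerate(build_chip(masks), start=1):
        print(f"layer {number}:")
        for row in layer:
            print("  |" + row + "|")
```

In this simplified model each finished layer reproduces the opaque pattern of its mask. Real fabrication adds many steps, such as deposition, doping, and cleaning, that the sketch leaves out.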