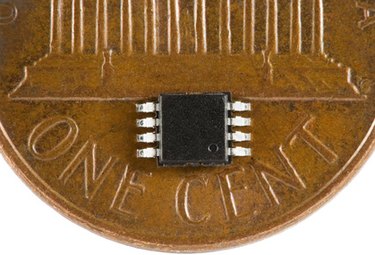

The microchip is the technology underlying much of modern life. Not just your home computer, but also your car, stereo, toaster and any number of other tools are likely to feature microchips. The microchip--or "integrated circuit"--invented by Jack Kilby in 1958, allowed for the dramatic shrinking that computer technology has undergone since, making the chip a ubiquitous part of modern life.

Analog and Digital

The microchips used today come in two basic categories that determine their function in the larger computer structure. Analog chips regulate and increase the strength of the signals they receive from digital chips, which do the actual processing of binary information. To use a metaphor, digital chips act as the brain and analog chips work as the nervous system. Using these two basic types of chips, computer makers create systems of ever greater complexity.
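For readers who like to see an idea in code, here is a minimal Python sketch of the two roles. It is only an illustration, not how any real chip is built: the function names, voltages, gain and threshold are all made-up assumptions. One function scales a signal the way an analog amplifier might, and the other reads each voltage as a binary 1 or 0 the way a digital circuit would.

```python
def amplify(samples, gain):
    """Analog-style step: scale each continuous voltage by a fixed gain."""
    return [v * gain for v in samples]


def to_bits(samples, threshold):
    """Digital-style step: read each voltage as a binary 1 or 0."""
    return [1 if v >= threshold else 0 for v in samples]


# Hypothetical weak voltages, chosen only for illustration.
weak_signal = [0.1, 0.9, 0.2, 0.8]

# Boost the signal, then interpret it as binary data.
strong_signal = amplify(weak_signal, gain=5.0)
bits = to_bits(strong_signal, threshold=2.5)

print(bits)
```

The amplified values can fall anywhere on a continuous range, while the digital step recognizes only two states. That difference is the basic split between the two chip categories.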

The Microprocessor

Creating the first microprocessors essentially meant shrinking an early computer's processing circuitry onto a single microchip. All of the basic functions formerly performed by a large system of separate, interconnected circuits were now combined in a single integrated circuit. The microprocessor made the personal computer possible and is now found at the heart of devices such as cell phones. Modern computers use a microprocessor chip to perform all the basic functions of processing data.

Analog Functions

While digital chips such as the microprocessor do the actual processing of binary numbers, analog chips perform important functions by handling the signals that pass between different microprocessors and their specialized outputs. Analog chips make computer design much easier for computer specialists, who can use existing, expertly designed analog chips to connect and regulate the newest digital chips. Some chips have also been created that combine digital and analog functions, allowing for even smaller computers.